Global Research Trends

Medical Physics

- [Med Phys.] Sparsier2Sparse: Self-supervised convolutional neural network-based streak artifacts reduction in sparse-view CT images

Yonsei University / Seongjun Kim, Jongduk Baek*

- Source

- Med Phys.

- Publication date

- 2023 Dec

- Journal issue

- 50(12):7731-7747. doi: 10.1002/mp.16552. Epub 2023 Jun 11.

- Content


Abstract

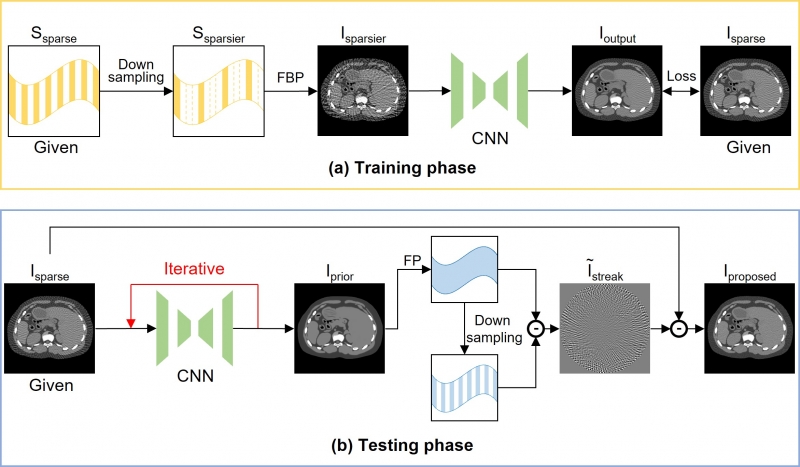

Background: Sparse-view computed tomography (CT) has attracted a lot of attention for reducing both scanning time and radiation dose. However, sparsely-sampled projection data generate severe streak artifacts in the reconstructed images. In recent decades, many sparse-view CT reconstruction techniques based on fully-supervised learning have been proposed and have shown promising results. However, it is not feasible to acquire pairs of full-view and sparse-view CT images in real clinical practice.

Purpose: In this study, we propose a novel self-supervised convolutional neural network (CNN) method to reduce streak artifacts in sparse-view CT images.
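The sketch below makes the sparse-view problem from the Background concrete. It simulates sparse-view CT with a simple 2D parallel-beam projector and filtered backprojection (FBP) written in NumPy. The geometry, the toy disk phantom, and the function names (`disk_phantom`, `project`, `fbp`, `reconstruct`) are illustrative assumptions. They are not the XCAT or Mayo data, nor the scanner geometry used in the paper. The sketch only shows that keeping fewer projection angles leaves larger streak errors in the FBP image relative to a dense-view reconstruction.

```python
import numpy as np

def disk_phantom(n=64):
    """Toy object (hypothetical): a uniform disk containing a smaller, denser disk."""
    y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
    img = np.zeros((n, n))
    img[x**2 + y**2 < 0.7**2] = 1.0
    img[(x - 0.25)**2 + y**2 < 0.15**2] = 2.0
    return img

def _grid(n):
    # Pixel-centre coordinates relative to the rotation centre, in pixel units.
    c = (n - 1) / 2.0
    y, x = np.mgrid[0:n, 0:n] - c
    return x, y

def project(img, angles):
    """Parallel-beam forward projection (pixel-driven, linear splitting onto detector bins)."""
    n = img.shape[0]
    n_det = int(np.ceil(n * np.sqrt(2))) + 2      # wide enough to cover the image diagonal
    x, y = _grid(n)
    sino = np.zeros((len(angles), n_det))
    for i, theta in enumerate(angles):
        pos = x * np.cos(theta) + y * np.sin(theta) + (n_det - 1) / 2.0
        lo = np.floor(pos).astype(int)
        w_hi = pos - lo                            # fraction of each pixel sent to bin lo + 1
        sino[i] = (np.bincount(lo.ravel(), weights=(img * (1 - w_hi)).ravel(), minlength=n_det)
                   + np.bincount(lo.ravel() + 1, weights=(img * w_hi).ravel(), minlength=n_det))
    return sino

def fbp(sino, angles, n):
    """Filtered backprojection with a spatial-domain Ram-Lak kernel (unit detector spacing)."""
    n_views, n_det = sino.shape
    pad = 2 ** int(np.ceil(np.log2(2 * n_det)))   # zero-pad so the circular FFT acts as linear convolution
    k = np.arange(pad)
    k = np.where(k <= pad // 2, k, k - pad)        # signed kernel offsets
    h = np.zeros(pad)
    h[0] = 0.25
    odd = k % 2 == 1
    h[odd] = -1.0 / (np.pi * k[odd]) ** 2
    filt = np.real(np.fft.ifft(np.fft.fft(sino, n=pad, axis=1) * np.fft.fft(h), axis=1))[:, :n_det]
    x, y = _grid(n)
    det = np.arange(n_det) - (n_det - 1) / 2.0
    img = np.zeros((n, n))
    for theta, row in zip(angles, filt):
        img += np.interp(x * np.cos(theta) + y * np.sin(theta), det, row)  # backproject one view
    return img * np.pi / n_views

def reconstruct(img, n_views):
    """Simulate a scan with n_views equally spaced angles over [0, pi) and reconstruct it by FBP."""
    angles = np.linspace(0.0, np.pi, n_views, endpoint=False)
    return fbp(project(img, angles), angles, img.shape[0])

if __name__ == "__main__":
    phantom = disk_phantom(64)
    reference = reconstruct(phantom, 360)
    for views in (90, 30, 15):
        rmse = np.sqrt(np.mean((reconstruct(phantom, views) - reference) ** 2))
        print(views, "views: RMSE vs 360-view FBP =", round(float(rmse), 4))
```

The 360-view FBP serves as the dense-view reference. As the number of views drops, the RMS difference from that reference grows because the streaks become more pronounced.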

Methods: We generate the training dataset using only sparse-view CT data and train a CNN based on self-supervised learning. Since the streak artifacts can be estimated using prior images under the same CT geometry system, we acquire prior images by iteratively applying the trained network to the given sparse-view CT images. We then subtract the estimated streak artifacts from the given sparse-view CT images to produce the final results.
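The training-data step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code. It assumes the sparsier-view data are formed by keeping every `factor`-th view of the measured sparse-view sinogram, with a default decimation factor of 2 (an assumed value). `fbp` stands for any reconstructor for the scanner geometry, and the helper name `make_sparsier_pair` is hypothetical. A CNN would then be trained to map each input image to its target image.

```python
import numpy as np

def make_sparsier_pair(sparse_sino, angles, fbp, factor=2, offset=0):
    """Build one self-supervised training pair from sparse-view data only (hypothetical helper).

    sparse_sino : (n_views, n_det) measured sparse-view sinogram
    angles      : (n_views,) projection angles in radians
    fbp         : callable(sinogram, angles) -> image, for the same scanner geometry
    factor      : keep every `factor`-th view to form the sparsier-view data (assumed value)
    offset      : index of the first kept view, so different subsets can be drawn
    """
    keep = np.arange(offset, len(angles), factor)
    network_input = fbp(sparse_sino[keep], angles[keep])  # sparsier view: more severe streaks
    network_target = fbp(sparse_sino, angles)             # the given sparse-view image acts as the label
    return network_input, network_target
```

Using different `offset` values yields several distinct sparsier subsets from one scan. That is one plausible way to enlarge the training set, but this summary does not state whether the paper does so.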

Results: We validated the imaging performance of the proposed method using the extended cardiac-torso (XCAT) phantom and the 2016 AAPM Low-Dose CT Grand Challenge dataset from Mayo Clinic. Based on visual inspection and the modulation transfer function (MTF), the proposed method preserved anatomical structures effectively and showed higher image resolution than various other streak artifact reduction methods for all numbers of projection views.
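The MTF measurement protocol is not described in this summary. A common approach, sketched here under that assumption, starts from an edge spread function (ESF) sampled across a sharp boundary. The ESF is differentiated into a line spread function (LSF), and the magnitude of the LSF's Fourier transform is normalized. The function names `mtf_from_edge` and `mtf50`, the Hann taper, and the `pixel_spacing_mm` argument are illustrative choices, not the paper's procedure.

```python
import numpy as np

def mtf_from_edge(esf, pixel_spacing_mm=1.0):
    """Estimate the MTF from a 1D edge spread function (ESF) sampled across an edge.

    Assumes the edge sits near the middle of the profile and that
    pixel_spacing_mm is the sample spacing along the profile.
    """
    esf = np.asarray(esf, dtype=float)
    lsf = np.gradient(esf)              # line spread function = derivative of the ESF
    lsf = lsf * np.hanning(lsf.size)    # taper the tails to limit noise and truncation effects
    spectrum = np.abs(np.fft.rfft(lsf))
    mtf = spectrum / spectrum[0]        # normalise so that MTF(0) = 1
    freqs = np.fft.rfftfreq(lsf.size, d=pixel_spacing_mm)  # cycles per mm
    return freqs, mtf

def mtf50(freqs, mtf):
    """Frequency at which the MTF first falls below 0.5 (linear interpolation)."""
    below = np.nonzero(mtf < 0.5)[0]
    if below.size == 0:
        return float(freqs[-1])         # never drops below 0.5 up to Nyquist
    i = below[0]
    return float(np.interp(0.5, [mtf[i], mtf[i - 1]], [freqs[i], freqs[i - 1]]))
```

A blurrier edge yields a lower MTF50. Summarizing each method's edge profile this way is one way to express a "higher image resolution" comparison as a single number.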

Conclusions: We propose a new framework for streak artifacts reduction when only sparse-view CT data are given. Although we do not use any information from full-view CT data for CNN training, the proposed method achieved the highest performance in preserving fine details. By overcoming the dataset requirements of fully-supervised methods, we expect that our framework can be utilized in the medical imaging field.

Affiliations

Seongjun Kim 1, Byeongjoon Kim 2, Jooho Lee 2, Jongduk Baek 2 3

1School of Integrated Technology, Yonsei University, Incheon, South Korea.

2Department of Artificial Intelligence, College of Computing, Yonsei University, Seoul, South Korea.

3Bareunex Imaging, Inc., Seoul, South Korea.

- Keywords

- computed tomography; convolutional neural network; self-supervised learning; sparse-view CT.

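- Research Summary

- Supervised-learning-based deep learning methods require large labeled training datasets. To address this, this study proposes a self-supervised method that effectively reduces streak artifacts in sparse-view CT images even when no labeled training data are available. We investigated how a deep learning network can be trained to remove streak artifacts when only sparse-view CT images are given. First, we trained the network by generating sparsier-view CT images, which contain more severe streak artifacts than the given sparse-view CT images. We then reduced the streak artifacts effectively by progressively applying the trained network to the given sparse-view CT images. Furthermore, by using the results of applying the network multiple times to estimate the original streak artifacts, we preserved anatomical structures in a way that more closely resembles the label data. This study shows that effective streak artifact removal and preservation of fine structures are possible in sparse-view CT even when only the sparse-view data are available for training. Because acquiring data is difficult in real clinical practice, we expect this methodology to help address that problem.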
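The inference stage described above can be sketched with placeholder callables. It applies the trained network progressively, then estimates and subtracts the original streaks. Several assumptions need flagging. `network`, `project` and `fbp` are hypothetical stand-ins for the trained CNN and for forward projection and FBP in the same sparse-view geometry. `n_iter` is an assumed hyperparameter. The streak estimate is taken as the difference between the sparse-view FBP of the prior image and the prior image itself; the exact estimator in the paper may differ.

```python
import numpy as np

def sparsier2sparse_inference(sparse_img, network, n_iter, project, fbp):
    """Hypothetical sketch of the inference stage.

    sparse_img : FBP image reconstructed from the given sparse-view data
    network    : trained sparsier->sparse mapping, callable(image) -> image
    n_iter     : number of times the network is applied (assumed hyperparameter)
    project    : callable(image) -> sinogram, using the SAME sparse-view geometry
    fbp        : callable(sinogram) -> image, same geometry
    """
    prior = np.asarray(sparse_img, dtype=float)
    for _ in range(n_iter):
        prior = network(prior)                       # each pass is meant to remove more streaks
    # Streaks that the sparse-view geometry would produce for the prior image (assumed estimator)
    estimated_streaks = fbp(project(prior)) - prior
    # Subtract the estimate from the original sparse-view image rather than returning the prior
    return sparse_img - estimated_streaks
```

Suppose the prior equalled the true object and `sparse_img` were its sparse-view FBP. The estimated streaks would then exactly match those in the given image, and the output would be the object itself. Only the streak estimate depends on the network output, and the final image is still built from the given sparse-view image.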
